In my first blog post, I went over 5 tricks you can use to improve the speed of your Laravel websites.

Below are a few additional Laravel optimisation tricks that may not specifically improve your website's performance, but will improve your website's reliability.

Query Chunking

Is loading too much data from the database at once triggering memory allocation errors? You could bump up the memory_limit in your php.ini file, or you could take a better route and chunk your queries.

Calling chunk on a query builder passes the results to a closure in batches, so only a set number of records is queried from the database at a time. In this example, the chunk method loads 200 users at a time:

```php
User::chunk(200, function ($users) {
    foreach ($users as $user) {
        // ...
    }
});
```

Going a little further with this, you can call the each method on the query builder, which calls chunk internally. This reduces the level of nesting and is more succinct.

```php
User::each(function ($user) {
    // Do something with $user
}, 200);
```

One thing to look out for when using chunk is that things can get weird if you modify the data while it is being queried. You can read about that in my post about Laravel Gotchas.

Another option is to use the cursor method, which is discussed further down the page.
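The idea behind chunk and each is simple paging. The sketch below is plain PHP, not Laravel's actual implementation: fetchPage() is a hypothetical stand-in for a LIMIT/OFFSET query, and an in-memory array plays the part of the users table. chunkRows() hands each page to a callback and stops early if the callback returns false (as chunk does), and eachRow() is layered on top of it, in the same spirit as each calling chunk internally.

```php
<?php

// Hypothetical stand-in for a "LIMIT $limit OFFSET $offset" query.
// In a real app this would hit the database; here an array plays the table.
function fetchPage(array $table, int $offset, int $limit): array
{
    return array_slice($table, $offset, $limit);
}

// Process $table $size rows at a time, passing each page to $callback.
// Returning false from the callback stops processing, mirroring chunk().
function chunkRows(array $table, int $size, callable $callback): bool
{
    $page = 1;

    do {
        $rows = fetchPage($table, ($page - 1) * $size, $size);
        $count = count($rows);

        if ($count === 0) {
            break;
        }

        if ($callback($rows, $page) === false) {
            return false;
        }

        $page++;
    } while ($count === $size); // a short page means we reached the end

    return true;
}

// Flatten the pages so the callback sees one row at a time, like each().
function eachRow(array $table, callable $callback, int $size = 1000): bool
{
    return chunkRows($table, $size, function (array $rows) use ($callback) {
        foreach ($rows as $key => $row) {
            if ($callback($row, $key) === false) {
                return false;
            }
        }
    });
}

$users = range(1, 450); // 450 fake user IDs

$pageSizes = [];
chunkRows($users, 200, function (array $rows) use (&$pageSizes) {
    $pageSizes[] = count($rows);
}); // $pageSizes is [200, 200, 50]

$total = 0;
eachRow($users, function (int $id) use (&$total) {
    $total += $id;
}, 200);
```

Only one page of rows is handed to the callback at a time, which is what keeps memory flat when the pages come from a real database.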

Relationship Aggregators

In the same way that we can eager load relations on models using with (or load on a collection afterwards), there are a number of other ways we can interact with relationships.

Take the following query, which returns the number of comments a user has:

```php
$user = User::query()->first();

echo $user->comments()->count();
```

The above runs two queries, which isn't so bad. Let's assume, though, that we want to show the comment count in several parts of our page. Every call to comments()->count() fires off another query.

We could fix this by caching the value, storing it in a variable, or loading all comments in and calling count on the collection instead. However, there's an easier way.

```php
$user = User::query()
    ->withCount('comments')
    ->first();

echo $user->comments_count;
```

As I mentioned earlier, this is similar to eager loading relationships: the same approach can retrieve aggregated data such as a count, min, max, sum, or a custom aggregation. The benefit is that the value is stored against the model's attributes, so we can read it several times without triggering an additional query. In fact, the above code executes only a single query.
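To make the query savings concrete, here is a plain-PHP sketch (not Eloquent code) that simply counts the queries each approach would run. QueryLog, commentCountFor() and loadCommentCounts() are hypothetical helpers, and the arrays stand in for the users and comments tables. Eloquent itself adds the count as a subquery in the select; the sketch only models how many round trips happen.

```php
<?php

// Hypothetical query counter standing in for the database connection.
final class QueryLog
{
    public int $queries = 0;
}

$comments = [
    ['id' => 1, 'user_id' => 1],
    ['id' => 2, 'user_id' => 1],
    ['id' => 3, 'user_id' => 2],
];

// Like $user->comments()->count(): every call is a fresh query.
function commentCountFor(QueryLog $log, array $comments, int $userId): int
{
    $log->queries++;

    return count(array_filter($comments, fn (array $c) => $c['user_id'] === $userId));
}

// Like withCount('comments'): one query computes every count up front and
// stores it on each user as a comments_count attribute.
function loadCommentCounts(QueryLog $log, array $users, array $comments): array
{
    $log->queries++;

    $counts = array_count_values(array_column($comments, 'user_id'));

    foreach ($users as &$user) {
        $user['comments_count'] = $counts[$user['id']] ?? 0;
    }
    unset($user);

    return $users;
}

$users = [['id' => 1, 'name' => 'Ada'], ['id' => 2, 'name' => 'Linus']];

// Reading the count three times for one user: three queries.
$naive = new QueryLog();
for ($i = 0; $i < 3; $i++) {
    $naiveCount = commentCountFor($naive, $comments, 1);
}

// Loading the aggregate once: one query, then plain attribute reads.
$eager = new QueryLog();
$withCounts = loadCommentCounts($eager, $users, $comments);
for ($i = 0; $i < 3; $i++) {
    $eagerCount = $withCounts[0]['comments_count'];
}
```

The final array_column($withCounts, 'comments_count', 'name') call is the plain-array equivalent of pluck('comments_count', 'name').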

Another example with multiple models - again this is all just one query:

```php
$users = User::query()
    ->withCount('comments')
    ->get();

dd($users->pluck('comments_count', 'name'));
```

Lazy Collections

Lazy collections work in a similar way to collections, with a few exceptions. Internally, lazy collections use PHP generators, which yield only the data required for the current iteration, keeping memory usage low.
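To see the generator behaviour that lazy collections build on, here is a framework-free sketch. The Counter class and the naturals(), filterGen() and takeGen() helpers are hypothetical and only mimic LazyCollection's filter and take: each value is produced only when the next stage asks for it, so taking three even numbers from an endless sequence only ever generates six values.

```php
<?php

// Hypothetical counter so we can observe how many values were produced.
final class Counter
{
    public int $count = 0;
}

// An endless sequence 1, 2, 3, ... produced one value per request.
function naturals(Counter $counter): Generator
{
    for ($n = 1; ; $n++) {
        $counter->count++;
        yield $n;
    }
}

// Lazily keep only the values that pass $keep.
function filterGen(iterable $source, callable $keep): Generator
{
    foreach ($source as $key => $value) {
        if ($keep($value)) {
            yield $key => $value;
        }
    }
}

// Lazily yield at most $limit values, then stop pulling from $source.
function takeGen(iterable $source, int $limit): Generator
{
    if ($limit <= 0) {
        return;
    }

    foreach ($source as $key => $value) {
        yield $key => $value;

        if (--$limit === 0) {
            break;
        }
    }
}

$counter = new Counter();
$evens = iterator_to_array(
    takeGen(filterGen(naturals($counter), fn (int $n) => $n % 2 === 0), 3),
    false // discard keys, like calling values() on a collection
);
// $evens is [2, 4, 6] and $counter->count is 6
```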

This is handy when working with large datasets, e.g. when looping over the results of a large database query or the lines of a log file. Let's review how we can use a lazy collection in these cases:

Finding long lines in a log file

The following example loads all lines from a log file into an array, then passes the array into a collection and returns the first $amount lines that are longer than $length.

public function getLongLines($length, $amount): array

{

$lines = [];

$file = fopen(storage_path('logs/laravel.log'), 'r');

do {

$line = fgets($file);

if (false !== $line) {

$lines[] = $line;

}

} while ($line);

return Collection::make($lines)->filter(fn ($v) => strlen($v) > $length)->take($amount)->toArray();

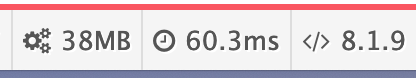

}When I run the following on a 20MB log file containing 65573 lines.

$this->getLongLines(200, 10)Load times vary between 60-100ms on my machine, and consume 38MB of memory.

Now for a similar example using LazyCollection:

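```php
// Assumes: use Illuminate\Support\LazyCollection;
public function getLongLinesLazy($length, $amount): array
{
    return LazyCollection::make(function () {
        $file = fopen(storage_path('logs/laravel.log'), 'r');

        try {
            // Yield one line at a time instead of building an array
            do {
                $line = fgets($file);

                if (false !== $line) {
                    yield $line;
                }
            } while ($line);
        } finally {
            // Also runs if the generator is discarded early, e.g. once take() has enough lines
            fclose($file);
        }
    })->filter(fn ($v) => strlen($v) > $length)->take($amount)->toArray();
}
```

Similar to the first example, this filters the log file for lines that exceed $length and takes $amount of them. The difference is that lines are yielded one at a time, and because take stops pulling from the generator once it has $amount matches, the rest of the file never has to be read.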

And dumping out with the same parameters:

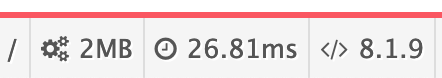

dump($this->getLongLinesLazy(200, 10));Gives me a whopping 2MB memory consumption and even takes down the processing time to 26ms.

Excluding Laravel's boot time and everything else, this takes less than a millisecond of processing time, whilst the non-lazy example takes roughly 40ms.
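The same idea can be written outside Laravel with a plain PHP generator. This is a sketch rather than the code benchmarked above: readLines() and longLines() are hypothetical helpers that take any file path, and the LineCounter object exists only to show how many lines were actually read. The try/finally closes the handle even when the caller stops iterating early, because PHP runs a generator's finally block when the generator is destroyed.

```php
<?php

// Hypothetical counter used to observe how much of the file was read.
final class LineCounter
{
    public int $read = 0;
}

// Yield the file's lines one at a time; only the current line is in memory.
function readLines(string $path, LineCounter $counter): Generator
{
    $file = fopen($path, 'r');
    if ($file === false) {
        throw new RuntimeException("Cannot open $path");
    }

    try {
        while (($line = fgets($file)) !== false) {
            $counter->read++;
            yield $line;
        }
    } finally {
        // Runs on normal completion and when the generator is destroyed early.
        fclose($file);
    }
}

// Return up to $amount lines longer than $length, stopping once enough are found.
function longLines(string $path, int $length, int $amount, LineCounter $counter): array
{
    $found = [];
    if ($amount <= 0) {
        return $found;
    }

    foreach (readLines($path, $counter) as $line) {
        if (strlen($line) > $length) {
            $found[] = $line;
            if (count($found) === $amount) {
                break; // stop reading the rest of the file
            }
        }
    }

    return $found;
}

// Build a throwaway 1,000-line file where every 10th line is long.
$path = tempnam(sys_get_temp_dir(), 'log');
$lines = [];
for ($i = 1; $i <= 1000; $i++) {
    $lines[] = ($i % 10 === 0 ? str_repeat('x', 250) : 'short line') . "\n";
}
file_put_contents($path, implode('', $lines));

$counter = new LineCounter();
$result = longLines($path, 200, 3, $counter); // reads only 30 of the 1,000 lines
unlink($path);
```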

Lazy collections with Eloquent

Load all users (10k rows) into a collection and loop over each one, performing an action:

```php
User::take(10000)->get()->each(fn ($user) => $this->doSomething($user));
```

Since all users are loaded into memory at once, this takes up 30MB of memory, though that will vary with the number of rows pulled in and the data stored against each row.

This is the alternative to query chunking mentioned earlier: the cursor method on a query builder returns a LazyCollection. Instead of loading 10k User models into a collection, only one model is held in memory at a time (although PHP's PDO driver may still buffer the raw query results).

```php
User::take(10000)->cursor()->each(fn ($user) => $this->doSomething($user));
```

The results show a reduced memory profile, but the processing time doesn't really improve. This example is still processing 10k users, after all.
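The difference between get() and cursor() comes down to when each model is built. The plain-PHP sketch below illustrates this and is not Eloquent's implementation: rows() is a hypothetical generator standing in for the database result set, hydrate() stands in for turning a row into a model, and HydrationLog records how many models have been built at the moment the first one is processed.

```php
<?php

// Hypothetical log of how many "models" have been built so far.
final class HydrationLog
{
    public int $hydrated = 0;
}

// Hypothetical stand-in for a database result set.
function rows(int $count): Generator
{
    for ($id = 1; $id <= $count; $id++) {
        yield ['id' => $id, 'name' => "user{$id}"];
    }
}

// Hypothetical stand-in for building a model from a raw row.
function hydrate(array $row, HydrationLog $log): object
{
    $log->hydrated++;

    return (object) $row;
}

// Like get(): build every model up front, then iterate.
function eagerUsers(int $count, HydrationLog $log): array
{
    $users = [];
    foreach (rows($count) as $row) {
        $users[] = hydrate($row, $log);
    }

    return $users;
}

// Like cursor(): build each model only when the loop asks for it.
function lazyUsers(int $count, HydrationLog $log): Generator
{
    foreach (rows($count) as $row) {
        yield hydrate($row, $log);
    }
}

$eagerLog = new HydrationLog();
$eagerSeenAtFirst = null;
foreach (eagerUsers(10000, $eagerLog) as $user) {
    $eagerSeenAtFirst ??= $eagerLog->hydrated; // 10000: all built before the loop
}

$lazyLog = new HydrationLog();
$lazySeenAtFirst = null;
foreach (lazyUsers(10000, $lazyLog) as $user) {
    $lazySeenAtFirst ??= $lazyLog->hydrated; // 1: only the current model
}
```

Both loops still build 10,000 objects in total, which matches the observation that the lazy version saves memory rather than processing time.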

Conclusion

If these tricks have helped you, you may also find my other post 5 Tricks To Speed Up Your Laravel Website helpful - assuming you have not read it already. Otherwise, go read it!

By signing up for my blog, you will be able to leave a comment and share your own tips and tricks with other readers below.

Lastly, if you're looking for a place to host your websites, check out Digital Ocean using my referral link and you will receive $100 in credit!